Mobile marketing has never had a greater divide between what your data shows and what your campaigns actually deliver. Attribution, once the bedrock of performance measurement, has become more unreliable than ever. With Apple rolling out App Tracking Transparency (ATT), the evolution of SKAdNetwork, and increasing limits on cross-app identifiers, marketers find themselves in a landscape where data loss is the rule, not the exception.

The consequences of this are very real. You are potentially spending budget on channels or creatives that don’t create incremental growth. You are probably scaling campaigns that look good on your dashboards but don’t drive net-new users. And you are likely scrambling to justify ROI because you have nowhere near enough evidence of what is really working.

This is exactly why incrementality testing is moving to the forefront in Q1 FY25. Incrementality isn’t merely a measurement technique; it is a strategic framework that helps you isolate true outcomes, reduce noise in your performance metrics, and make confident decisions in a privacy-first ecosystem.

In this guide, we’ll cover what incrementality testing is, how it works, and how you can apply it to your iOS, Android, and cross-platform campaigns. You’ll also discover how platforms such as Apptrove are helping scale privacy-compliant testing at a time when marketers need clarity more than ever.

Why Marketers Are Finally Prioritising Incrementality Testing

There’s a silent reckoning happening in mobile marketing. For years, attribution reports have been treated as holy scripture. Clicks received credit, impressions were weighted, and conversion windows dictated what worked and what did not. The numbers were clean, until they weren’t.

Incrementality testing has evolved from a nice-to-have into a strategic priority for mobile marketers. In an increasingly privacy-restricted, signal-poor digital ecosystem, marketers face growing pressure to prove their campaigns’ actual impact.

Traditional attribution still exists, but it offers marketers insufficient clarity, let alone comfort, in performance assessments. Incrementality testing fills that gap by identifying the true causal effect of marketing activities, allowing performance teams to isolate which campaigns generate net-new conversions and which do not.

This shift is driven by three major factors: the decline of deterministic attribution, the rise of privacy-first measurement protocols, and a growing urgency to optimize ad spend under increased economic scrutiny. The conclusion is clear: marketers need measurement systems that deliver precision, compliance, and business impact, and incrementality testing ticks all three boxes.

1. Attribution Is No Longer Sufficient

Attribution models were originally built for an ecosystem where tracking was persistent and user-based. In that context, last-click, multi-touch, and even probabilistic models provided directional insight into user journeys. With operating system changes like Apple’s App Tracking Transparency (ATT) and SKAdNetwork (SKAN), the assumptions underlying these models have been severely disrupted.

Currently, attribution has some major limitations:

- Signal degradation: Access to user identifiers is at an all-time low, and attribution data is often incomplete or modeled.

- Channel overlap: Users interact across many touchpoints on web, app, and CTV, making it tough to assign credit correctly.

- Over-attribution risk: Attribution models can attribute conversions to campaigns that did not meaningfully influence the outcome.

Without a methodology that separates correlation from causation, marketers risk misallocating large portions of their budgets.

2. Privacy Regulations Are Redefining Measurement Frameworks

Policies around data collection have fundamentally changed what it means to act on data. With Apple launching the ATT framework, and the global shift toward privacy regulation (GDPR, CCPA, etc.), marketers no longer have the complete picture.

Even with SKAN workarounds or aggregated reporting, marketers have to now make decisions with less deterministic data, fewer identifiers, and shorter attribution windows.

These changes affect not only reporting but also how you plan campaigns, set KPIs, and demonstrate value. Marketers now contend with:

- Lack of access to user-level insight

- Increased reliance on aggregate, anonymised reporting

- Delayed postbacks and reduced granularity

Incrementality testing provides a compliant alternative. By using group-based experiments instead of tracking individuals, it assesses performance through statistical outcomes. Because it relies on aggregated group results, this design naturally conforms to privacy requirements, making it both a valid measurement tool today and a durable one for the future.

3. Economic Pressure Is Driving a Focus on Verified ROI

With marketing budgets under scrutiny, especially in capital-sensitive verticals like fintech, gaming, and eCommerce, performance teams must now justify every dollar spent. The ability to demonstrate lift, rather than simply activity, is becoming the baseline for decision-making.

Incrementality testing enables:

- Smarter budget allocation: By identifying campaigns that drive net-new users or purchases.

- Creative and channel testing at scale: Without dependence on modeled data.

- Confidence in pause/scale decisions: With statistically valid control groups validating outcomes.

In this way, incrementality testing is not just a measurement method; it becomes a strategic lever for performance optimisation.

4. From Measurement to Strategy: A Shift in How Marketers Operate

The broader implication of incrementality testing lies in how it changes the role of measurement. Rather than serving as a retrospective report, incrementality enables:

- Forward-looking strategy: Insights that inform future investment decisions, not just evaluate past ones.

- Cross-functional alignment: Shared understanding of impact across marketing, product, and finance teams.

- Agility in experimentation: Rapid testing of creatives, offers, channels, and messaging, backed by validated results.

This aligns directly with how high-performing marketing organisations are evolving. Measurement is no longer a passive report. It is a decision-making engine.

What Is Incrementality Testing in Mobile Marketing?

In essence, incrementality testing assesses whether your marketing efforts have added value or just captured the value that would have happened regardless.

Imagine you ran an ad campaign that resulted in 10,000 installs for your app. Attribution could report that your campaign drove all 10,000 installs, but what if 7,000 of those users would have installed the app without seeing your ad? That would mean that your actual impact, which is your incremental lift, was only 3,000 installs.

Incrementality establishes the difference between causation and coincidence. It measures the extra conversions your campaign created above the baseline of what would have happened organically. This is what makes it so powerful, especially as attribution continues to face headwinds and privacy concerns further reduce visibility.

When you start focusing on causation, you stop crediting ad spend for results it hasn’t actually driven. You stop scaling campaigns that were simply harvesting intent rather than creating it. You start building your media strategy around results that grow your business, not just ones that make dashboards look good.

The Core Concept: Test vs. Control

Incrementality testing is an experiment at its core. It splits your audience into two groups:

Test group: Users who are exposed to your ad

Control group: Similar users who are held out of the campaign and do not see your ad

By comparing the conversion rates of the two groups, you can isolate the true lift the campaign has created.

Formula:

Incremental Lift = (Conversion Rate in Test Group) – (Conversion Rate in Control Group)

If the two groups convert at the same rate, your campaign did not create any incrementality. If the test group converts at a higher rate than the control group, and the gap is larger than random noise would explain, you have evidence that your campaign created incrementality, along with an estimate of how much.

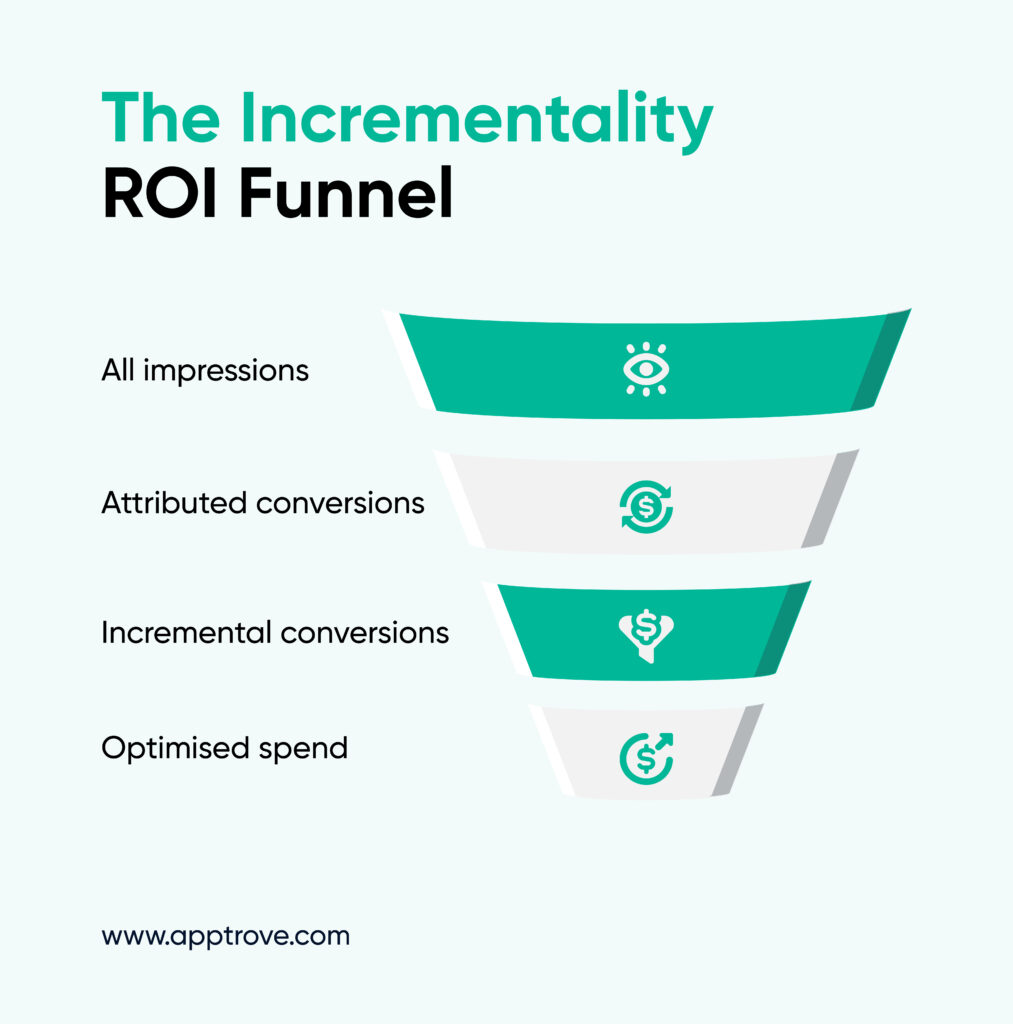
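To make the formula concrete, here is a minimal Python sketch. The function name `incremental_lift` and the input counts are hypothetical, chosen so the numbers mirror the earlier 10,000-install example: 200,000 exposed users installing at 5% against a same-sized control group installing at 3.5%. It reports the absolute lift from the formula, the same gap relative to the baseline, and how many installs the campaign actually added.

```python
def incremental_lift(test_conversions: int, test_users: int,
                     control_conversions: int, control_users: int) -> dict:
    """Compare test and control conversion rates (hypothetical helper)."""
    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users
    # Incremental Lift = test conversion rate - control conversion rate
    absolute_lift = test_rate - control_rate
    # Relative lift expresses the same gap as a share of the baseline rate
    relative_lift = absolute_lift / control_rate if control_rate else float("inf")
    # Conversions the test group would have produced at the baseline rate anyway
    baseline_conversions = control_rate * test_users
    return {
        "absolute_lift": absolute_lift,
        "relative_lift": relative_lift,
        "incremental_conversions": test_conversions - baseline_conversions,
    }


# Illustrative numbers only: 10,000 attributed installs, 7,000 expected organically.
result = incremental_lift(10_000, 200_000, 7_000, 200_000)
print(f"Absolute lift: {result['absolute_lift']:.1%}")
print(f"Relative lift: {result['relative_lift']:.1%}")
print(f"Incremental installs: {result['incremental_conversions']:,.0f}")
```

Here attribution would credit all 10,000 installs, while the comparison with the control group shows that only about 3,000 of them were incremental.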

How Incrementality Complements Attribution

To be clear, incrementality testing is not a substitute for attribution. The two serve different purposes.

While attribution shows which touchpoint a conversion is credited to, incrementality shows whether that touchpoint actually caused the conversion.

When both are used in tandem, they provide a fuller picture of performance. Attribution helps you illuminate the journey; incrementality helps you understand what actually drove the outcome.

Different Types of Incrementality Testing and When to Use Them

By now, you’ve got the gist of what incrementality testing is and why it is important. The catch is that there isn’t one simple way to test for lift.

Your campaign objective, budget size, access to data, and platform limitations (such as SKAN on iOS) will influence how you construct your test, and therefore, what kind of insights you get in return.

Here are the most common forms of incrementality testing, as well as how each one works, their relative strengths and weaknesses, and when you should use them.

1. Geo-Based Incrementality Testing

Your campaign launches in selected regions (test markets) while other, comparable regions are deliberately held back as control markets. By controlling for baseline trends and comparing performance across regions, you can estimate incremental lift. This approach suits broad-reach campaigns, paid social, and large programmatic budgets. It works well because geos can be naturally isolated and compared at scale, and it does not require user-level data, only regional KPIs.
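One common way to "control for baseline trends" in a geo test is a difference-in-differences estimate: measure how much the test regions changed after launch, then subtract how much the control regions changed over the same period. The sketch below is a minimal Python version of that technique, which is our assumption rather than a method the guide prescribes; the function name and the daily install figures are hypothetical, and the estimate relies on test and control regions following parallel trends when no campaign runs.

```python
from statistics import mean


def diff_in_diff(test_pre, test_post, control_pre, control_post):
    """Difference-in-differences lift estimate for a geo test (hypothetical helper).

    Each argument is a list of daily KPI values (e.g. installs per day),
    aggregated across the test or control regions, before and during the campaign.
    """
    test_change = mean(test_post) - mean(test_pre)
    # The control regions' change approximates what would have happened anyway
    control_change = mean(control_post) - mean(control_pre)
    return test_change - control_change


# Illustrative daily installs, four days before and four days during the campaign
daily_lift = diff_in_diff(
    test_pre=[100, 102, 98, 100], test_post=[130, 128, 132, 130],
    control_pre=[80, 82, 78, 80], control_post=[90, 88, 92, 90],
)
print(f"Estimated incremental installs per day: {daily_lift}")
```

The test regions gained 30 installs a day, but the control regions also gained 10 without any campaign, so only about 20 a day are attributed to the campaign itself.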

2. PSA (Public Service Announcement) Testing

Instead of holding users out entirely, control group users see a non-promotional message (like a public service ad). This ensures the ad placement is still occupied but not pushing your product or offer. It is ideal for environments where inventory must be filled, such as YouTube, OTT, or programmatic channels.

3. Ghost Ad Testing

Users in both groups “win” the ad auction, but the control group’s ad is suppressed at delivery: the platform logs the impression, but the user doesn’t actually see the ad. This design cleanly isolates the effect of seeing the ad from simply being in the bid pool. It mimics the test-control design without wasting spend on PSA creatives.
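The analysis step is what makes ghost ads useful: you compare only users who won the auction in each group, exposed users in test against "would-have-been-exposed" users in control. The sketch below assumes a hypothetical per-user export with a group label, a flag for a logged (real or ghost) impression, and a conversion flag; it does not reflect any specific platform's API or report format.

```python
from dataclasses import dataclass


@dataclass
class UserOutcome:
    """Hypothetical per-user record from a ghost ad experiment."""
    group: str                # "test" or "control"
    logged_impression: bool   # real impression (test) or ghost impression (control)
    converted: bool


def ghost_ad_lift(users):
    """Conversion-rate lift among users who won the auction in either group."""
    def rate(group):
        eligible = [u for u in users if u.group == group and u.logged_impression]
        if not eligible:
            raise ValueError(f"no logged impressions in {group} group")
        return sum(u.converted for u in eligible) / len(eligible)

    return rate("test") - rate("control")


sample = [
    UserOutcome("test", True, True), UserOutcome("test", True, True),
    UserOutcome("test", True, False), UserOutcome("test", True, False),
    UserOutcome("test", False, True),      # never won the auction: excluded
    UserOutcome("control", True, True), UserOutcome("control", True, False),
    UserOutcome("control", True, False), UserOutcome("control", True, False),
    UserOutcome("control", False, True),   # never won the auction: excluded
]
print(f"Ghost ad lift: {ghost_ad_lift(sample):.0%}")
```

Filtering on logged impressions keeps both sides comparable, because users who never won the auction in either group are excluded from the comparison.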

4. Suppression Holdout Testing

A fixed percentage of your eligible users (e.g., 10%) is intentionally excluded from the campaign. The rest receive normal exposure. After a defined time window, you compare conversion rates. It is simple and effective, and works especially well in owned channels where you control delivery. It is ideal for retention, upsell, and re-engagement strategies.
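A holdout only works if the same user always lands in the same group. One common approach, sketched here in Python as an assumption rather than a prescribed method, hashes each user ID together with an experiment name into a stable bucket in [0, 1) and sends the lowest 10% to control. The function and experiment names are hypothetical.

```python
import hashlib


def assign_group(user_id: str, experiment: str, holdout_pct: float = 0.10) -> str:
    """Deterministically assign a user to 'control' (holdout) or 'test'."""
    if not 0.0 <= holdout_pct <= 1.0:
        raise ValueError("holdout_pct must be between 0 and 1")
    # Salting with the experiment name gives each experiment an independent split
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1)
    bucket = int(digest[:8], 16) / 0x100000000
    return "control" if bucket < holdout_pct else "test"


print(assign_group("user-42", "push_winback_q1"))
```

Because assignment is a pure function of the ID and experiment name, any system (CRM, push service, analytics) can recompute it without storing a lookup table.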

5. Time-Based Testing

The same campaign is turned off and on over alternating time periods. You compare conversions across those time windows to measure lift. It is useful when audiences can’t be easily split, such as on limited reach channels.
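A time-based test boils down to comparing average outcomes on "on" days against "off" days. The minimal Python sketch below uses hypothetical names and illustrative figures. Because seasonality, day-of-week effects, and carryover from earlier exposure all leak into this comparison, treat the result as a directional signal rather than a clean causal estimate.

```python
from statistics import mean


def on_off_lift(daily_conversions, campaign_on):
    """Average daily conversions when the campaign ran minus when it was paused."""
    if len(daily_conversions) != len(campaign_on):
        raise ValueError("each day needs exactly one on/off flag")
    on_days = [c for c, on in zip(daily_conversions, campaign_on) if on]
    off_days = [c for c, on in zip(daily_conversions, campaign_on) if not on]
    if not on_days or not off_days:
        raise ValueError("need at least one 'on' day and one 'off' day")
    return mean(on_days) - mean(off_days)


# Illustrative: three days on, then three days off
conversions = [120, 118, 122, 100, 98, 102]
schedule = [True, True, True, False, False, False]
print(f"Directional lift per day: {on_off_lift(conversions, schedule)}")
```

Alternating on and off periods several times, rather than once, helps spread day-of-week and seasonal effects across both conditions.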

How to Set Up Your First Incrementality Test (Step-by-Step)

You’re convinced incrementality testing is the future, and you’re ready to get started. The good news is that you don’t need to be a data scientist or have a million-dollar budget to run a credible incrementality test. You do, however, need to approach it in a structured way. Incrementality testing is an experiment at heart, and like any good experiment, it takes intention, balance, and scientific rigor to set up and run properly.

Below is an actionable step-by-step playbook to help you get your first test going, aimed at the mobile marketer who prefers clarity over ambiguity.

Step 1: Start With a Simple, Actionable Hypothesis

All good incrementality tests start with a focused question about what you are trying to prove. The best hypotheses are specific, measurable, and tied to the objective of your campaign. Say you are running a push notification campaign to reactivate churned users. A strong hypothesis would be: “Sending a time-sensitive discount message to churned users will lead to a higher reactivation rate than among churned users who do not receive the message.” Avoid ambiguous goals like “increase engagement.” Make sure you identify the conversion event (e.g., app install, purchase, reactivation), identify the audience, and describe the effect you expect.

Step 2: Choose a Testing Method That Matches Your Use Case

Not all incrementality tests are created equal. Your test setup should take into account the type of campaign, the tools available, your media channels, and your level of targeting control.

- If you’re testing broad acquisition in multiple markets, you could attempt geo-based testing.

- If you are using CTV ads or in-app banners, PSA-based tests keep the placement filled while ensuring your control group sees something neutral that won’t influence them.

- For retargeting or CRM channels such as email or push, suppression holdouts, where a portion of your audience is excluded, work well.

- If you’re using a platform like Meta or a DSP that allows ghost ad setups, you can suppress delivery to your control group while still letting those users take part in the auction.

- When it is not possible to segment audiences, time-based tests where you toggle your campaign off and on by defined intervals could work for you, giving directional lift signals.

The point is that test design should fit your environment, not the other way around.

Step 3: Segment Your Audience Cleanly

Once you’ve selected your test format, the next step is segmentation. This is where many tests fall apart. If your test and control groups are not comparable, your results will have little value. You want to split your audience into two statistically similar cohorts. The only real difference between them should be ad exposure.

Balance factors such as platform (iOS vs. Android), user lifecycle stage, geography, and expected conversion intent. If you’re running a geo test, make sure the test and control regions have similar historical behavior and seasonality.
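A quick way to confirm your groups really are comparable is to check pre-campaign metrics with a standardized mean difference (SMD): the gap between group means divided by the pooled standard deviation. The Python sketch below assumes you can export a few pre-period metrics per user; the metric names and the 0.1 threshold (a widely used rule of thumb for "balanced") are illustrative assumptions.

```python
import math
from statistics import mean, stdev


def standardized_mean_difference(test_values, control_values):
    """Gap between group means, in units of pooled standard deviation."""
    diff = mean(test_values) - mean(control_values)
    pooled_sd = math.sqrt((stdev(test_values) ** 2 + stdev(control_values) ** 2) / 2)
    if pooled_sd == 0:
        return 0.0 if diff == 0 else math.copysign(math.inf, diff)
    return diff / pooled_sd


def imbalanced_metrics(test_covariates, control_covariates, threshold=0.1):
    """Return pre-period metrics whose |SMD| exceeds the threshold."""
    flagged = {}
    for name, test_values in test_covariates.items():
        smd = standardized_mean_difference(test_values, control_covariates[name])
        if abs(smd) > threshold:
            flagged[name] = smd
    return flagged


# Illustrative per-user pre-period metrics (real tests use thousands of users)
test = {"sessions_last_30d": [5, 7, 6, 8, 4], "purchases_last_30d": [1, 0, 2, 1, 1]}
control = {"sessions_last_30d": [5, 7, 6, 8, 4], "purchases_last_30d": [0, 0, 1, 0, 1]}
print(imbalanced_metrics(test, control))
```

If a metric is flagged, re-randomize or stratify on it before launch rather than trying to correct for the imbalance afterwards.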

Randomization and scale are important here. A test with 200 users will not provide the statistical power you need. You should be testing with thousands, preferably tens of thousands, of users per group; the exact number depends on your baseline conversion rate and the size of the lift you want to detect.
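To estimate how many users you need, you can use the standard sample-size formula for comparing two proportions. The Python sketch below uses only the standard library; the function name and the default 5% significance level and 80% power are conventional choices assumed here, not requirements from this guide.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Users needed per group to detect baseline_rate -> expected_rate (two-sided)."""
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be strictly between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - baseline_rate) ** 2)


# Detecting a move from 4.2% to 5.8% reactivation
print(sample_size_per_group(0.042, 0.058))
```

For a 4.2% baseline and a hoped-for 5.8%, this comes out at roughly 3,000 users per group; halving the expected lift roughly quadruples the requirement, which is why small effects need tens of thousands of users.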

Step 4: Launch and Monitor Your Campaign Carefully

Now that you have created your groups, it’s finally time to run the campaign. Make sure that only your test group is served your campaign creatives. If you’re using a holdout or ghost ad method, ensure your control group is not served any impressions of these ads.

Run your campaign long enough to collect meaningful behavioral data. For upper-funnel actions such as installs or clicks, 7–10 days might be sufficient. For lower-funnel actions such as purchases or subscriptions, you might need 14–21 days, depending on how long your user journey is.

During the campaign, do not make any mid-test changes to creative, targeting, or budget split unless you are prepared to restart the test. Keep changes to an absolute minimum to eliminate extraneous variables and ensure that you are only measuring the impact of the campaign.

Step 5: Measure Lift and Make It Meaningful

Once the campaign ends, it’s time for analysis. Compare the conversion rates of your test and control groups. The difference is your incremental lift: the value your campaign actually created.

If your test group reactivated at 5.8% and your control group at 4.2%, your absolute lift is 1.6 percentage points (about 38% relative to the baseline). Those 1.6 points are what the campaign actually delivered above the baseline, and that is your evidence of impact. You can also calculate the Cost per Incremental Outcome by dividing total spend by the number of incremental conversions. This gives you a way to compare the true ROI of different campaigns across channels and formats.
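Before acting on a lift number, it is worth checking that the gap is unlikely to be random noise. The Python sketch below combines a pooled two-proportion z-test with the Cost per Incremental Outcome calculation. The group sizes (50,000 each) and the $20,000 spend are hypothetical, chosen to reproduce the 5.8% vs. 4.2% example; incremental conversions are estimated as absolute lift multiplied by the test group size.

```python
import math
from statistics import NormalDist


def lift_report(test_conv: int, test_users: int,
                control_conv: int, control_users: int, spend: float) -> dict:
    """Lift, significance and cost per incremental outcome (hypothetical helper)."""
    p_test = test_conv / test_users
    p_control = control_conv / control_users
    abs_lift = p_test - p_control

    # Pooled two-proportion z-test: could a gap this size be random noise?
    p_pool = (test_conv + control_conv) / (test_users + control_users)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / test_users + 1 / control_users))
    if se == 0:
        raise ValueError("no variation in outcomes; cannot test significance")
    z = abs_lift / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    # Extra conversions in the test group beyond what the baseline rate predicts
    incremental = abs_lift * test_users
    cost_per_incremental = spend / incremental if incremental > 0 else math.inf

    return {
        "absolute_lift": abs_lift,
        "relative_lift": abs_lift / p_control,
        "p_value": p_value,
        "incremental_conversions": incremental,
        "cost_per_incremental": cost_per_incremental,
    }


report = lift_report(2_900, 50_000, 2_100, 50_000, spend=20_000)
for key, value in report.items():
    print(f"{key}: {value:.4g}")
```

With these illustrative inputs the campaign added about 800 reactivations at roughly $25 each, and the p-value is far below 0.05. With a few hundred users per group, the same 1.6-point gap could easily fail that check.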


Step 6: Interpret, Iterate, and Don’t Just Chase Success

The last, and often most overlooked, step is interpretation. Your role is not only to confirm lift. It is to understand why lift occurred and what your next move should be.

If the test showed lift, that’s great. Can you scale the campaign, or apply the same creative thinking to another one? If the test failed to show lift, that is still valuable: you have saved future budget by confirming what does not drive results. Use those insights to optimize creative, rethink targeting, and adjust spend levels.

Just as importantly, keep building a culture of testing. Incrementality should not be viewed as a one-off diagnostic tool. The most successful mobile marketers run incrementality tests consistently: every quarter, for every large campaign, and before any major budget shift.

How Incrementality Testing Optimizes Your Ad Spend

Making every dollar count is harder in a post-attribution world.

Performance marketing used to be simple. You would check your attribution dashboard to see where conversions came from and spend accordingly. Now it’s not that easy, and spend decisions are harder to justify.

Budgets are being scrutinized more than ever. CPIs are rising, user acquisition is competitive, and privacy frameworks have changed the way you measure success. Marketers are using incrementality testing to move from simply counting attributed conversions to proving which conversions their spend actually caused.

Marketers are using lift data to allocate spend more accurately, eliminate waste, and build strategies that yield real return on investment, not just the appearance of it.

Shifting From Vanity Metrics to Value Metrics

Typical metrics like impressions, clicks, or even attributed installs often don’t represent true impact. If a user was always going to convert and your ad stepped in along the way, you didn’t create value; you simply took credit.

Incrementality turns this mindset upside down. You can see where true growth is happening and where your campaigns are driving net-new behavior, versus where you are simply paying for conversions that organic demand or brand strength would have delivered anyway.

This means you move from optimizing for volume to optimizing for value.

Minimize Wasted Spend on Overlapping Channels

You could easily be running user acquisition campaigns across five paid channels. Attribution shows conversions across all five, so you assume each is driving results. But incrementality testing reveals that two of them are cannibalizing organic users or, worse, claiming credit for overall performance while adding little to no incremental impact.

That type of insight can save you thousands, and sometimes millions, in spend. It helps you:

- Identify channels to prune that deliver minimal lift.

- Reinvest in high-lift channels, especially where attribution is significantly biased against them.

- Ensure you’re not paying for the same user across two overlapping platforms.

You are no longer guessing at media overlap; you can see how each channel performs independently and how the channels work in combination.

Justify Budget to Leadership with Confidence

Marketing teams are being asked to prove they are not wasting spend. Attribution data alone often doesn’t hold up in the boardroom. Incrementality data does.

It arms you with:

- Proof that X% of conversions wouldn’t have occurred without your campaign

- Understanding what tactics lead to real business impact, versus attributed clicks

- An accurate, privacy-compliant measurement model that is consistent with where the industry is going

This is especially important in Q1, when FY25 budgets roll out and finance teams tighten the screws. Being able to speak about causal ROI puts you in a stronger position to defend current spend or to secure a larger budget if needed.

Making Incrementality Testing a Habit: How to Operationalize It in Q1

Most marketers have run an incrementality test once. They got a lift number, filed a deck, maybe moved a budget line, and stopped there.

The real opportunity is to make incrementality an ongoing process at scale, across all campaigns, channels, and quarters: not a side project, but the default.

Here is how to operationalize incrementality testing and make it a Q1 FY25 growth engine, not a one-off experiment.

Start by Embedding It in Your Campaign Lifecycle

Incrementality testing shouldn’t live in a silo or be saved for “special” campaigns. It needs to show up at three core phases of your marketing process:

- Pre-Launch Planning
  - Define your goal (installs, subscriptions, reactivations)
  - Build a hypothesis to test
  - Allocate 5–10% of your audience or budget for holdout/control


- Mid-Campaign Optimization
  - Run ongoing tests on creatives, messaging, and offers
  - Evaluate incremental lift for each cohort
  - Pause or scale based on causal performance, not just attributed results


- Post-Campaign Analysis
  - Review performance across both test and control
  - Calculate true cost-per-incremental-action
  - Feed insights into your next media plan


This loop turns incrementality into a feedback engine that powers better decisions across quarters.

Designate Ownership with Your Team

The biggest barrier to operationalizing testing is not technical but organizational. The best-performing teams assign a Testing Owner, someone responsible for:

- Setting quarterly testing priorities

- Designing and managing test-control splits

- Centralizing learnings into a playbook

- Working across teams to apply those learnings

It does not have to be a new hire. It can be a role within your performance team. The key is clarity and accountability. When testing is everyone’s responsibility, it usually becomes no one’s responsibility.

Build a Testing Calendar, Not Just One-Offs

Just as you plan campaigns by quarter or sprint, you should plan testing too. Create a Q1 testing roadmap with a cadence of experiments mapped to key business priorities.

For example:

- Week 1–3: Test incrementality of brand video ads across geos

- Week 4–6: Run holdout test on push campaigns for dormant users

- Week 7–9: Compare creative A/B variants using ghost ad suppression

- Week 10–12: Test incremental ROAS across SKAN-eligible networks

This lets you budget for testing ahead of time and ensures experiments aren’t deprioritized during busy campaign cycles.

Set Internal Benchmarks for Incrementality

Over time, as you run more tests, you’ll begin to build internal benchmarks. You will understand what lift means for your brand, your product, and your audience. More importantly, you will start to identify trends.

For example, you might find that:

- UA campaigns on Android generate 3–5% incremental lift

- Winback offers via push drive 10–15% lift among users who have been inactive for 30+ days

- CTV ads deliver lower attributed conversions, but 2x higher incremental reactivation

Benchmarks like these let you assess new campaigns with a sharper eye.

Make a Living Playbook of Learnings

Every test, successful or not, builds your knowledge base. Don’t let the data die in a deck. Record each test and its outcome, and create a living testing playbook for your team. Include in the playbook:

- The test hypothesis and structure

- The creative/audience/channel tested

- Results and lift observed

- Next steps or recommended action
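If your playbook lives in a spreadsheet or database, a consistent record per test makes the internal benchmarks described earlier easy to compute. The Python sketch below is one hypothetical structure mirroring the fields above, plus a helper that takes the median relative lift per channel. The field names and sample values are illustrative, not results.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import median


@dataclass
class TestRecord:
    """Hypothetical playbook entry for one incrementality test."""
    hypothesis: str
    method: str           # e.g. "geo", "holdout", "ghost ad", "PSA", "time-based"
    channel: str
    audience: str
    relative_lift: float  # e.g. 0.12 for a 12% relative lift
    next_step: str


def lift_benchmarks(records):
    """Median relative lift per channel across all recorded tests."""
    by_channel = defaultdict(list)
    for record in records:
        by_channel[record.channel].append(record.relative_lift)
    return {channel: median(lifts) for channel, lifts in by_channel.items()}


playbook = [
    TestRecord("Discount push reactivates churned users", "holdout", "push",
               "inactive 30+ days", 0.10, "scale"),
    TestRecord("Urgency copy beats generic copy", "holdout", "push",
               "inactive 30+ days", 0.14, "roll out copy"),
    TestRecord("Reminder push drives reactivation", "holdout", "push",
               "inactive 60+ days", 0.12, "iterate"),
    TestRecord("Brand video drives installs", "geo", "ctv",
               "all users", 0.05, "retest in Q2"),
]
print(lift_benchmarks(playbook))
```

Using the median rather than the mean keeps a single outlier test from distorting a channel's benchmark.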

Lift Is the New Truth. Are You Measuring It Yet?

Mobile marketing has changed to the point where attribution is no longer enough. With user-level signals declining, overlaps increasing, and budgets getting tighter, the question is no longer just where a user came from. The more important question now is whether that user would have converted without the spend.

That is what incrementality testing answers, not by instinct or guesswork but with a structured, statistically sound experiment. It helps separate signal from noise, strategy from misdirection, and real outcomes from dashboards that merely tell a convenient story.

The cherry on top is that you don’t need to throw away your stack or build an acquisition lab of PhDs. What you really need is a repeatable, operational model and a culture that doesn’t just accept attribution, but actively challenges it.

Whether you are scaling a new acquisition channel, leaning into CTV or simply trying to justify your Q1 budgets, incrementality gives you the data story that actually stacks up. It is not just proving value, but learning how to create value, and doing more of that.

At Apptrove, we think incrementality testing should be as easy as attribution once was. That’s why we are giving mobile marketers the tools and the confidence to embed incrementality across their growth workflows.

Because in FY25, if you are marketing without incrementality, you are simply spending. With incrementality testing, it’s performance, verified. Get started today with Apptrove!